Your Lack of Ontology Will Be Your AI Boondoggle

If you’ve launched an AI pilot that promised to revolutionize decision-making, you know how this story ends. Six months in, the models are producing inconsistent outputs. Business users don’t trust the recommendations. IT is scrambling to integrate data from systems that speak different languages. The executive sponsor is asking uncomfortable questions about ROI.

The problem isn’t your models. The problem is you’re building AI on quicksand.

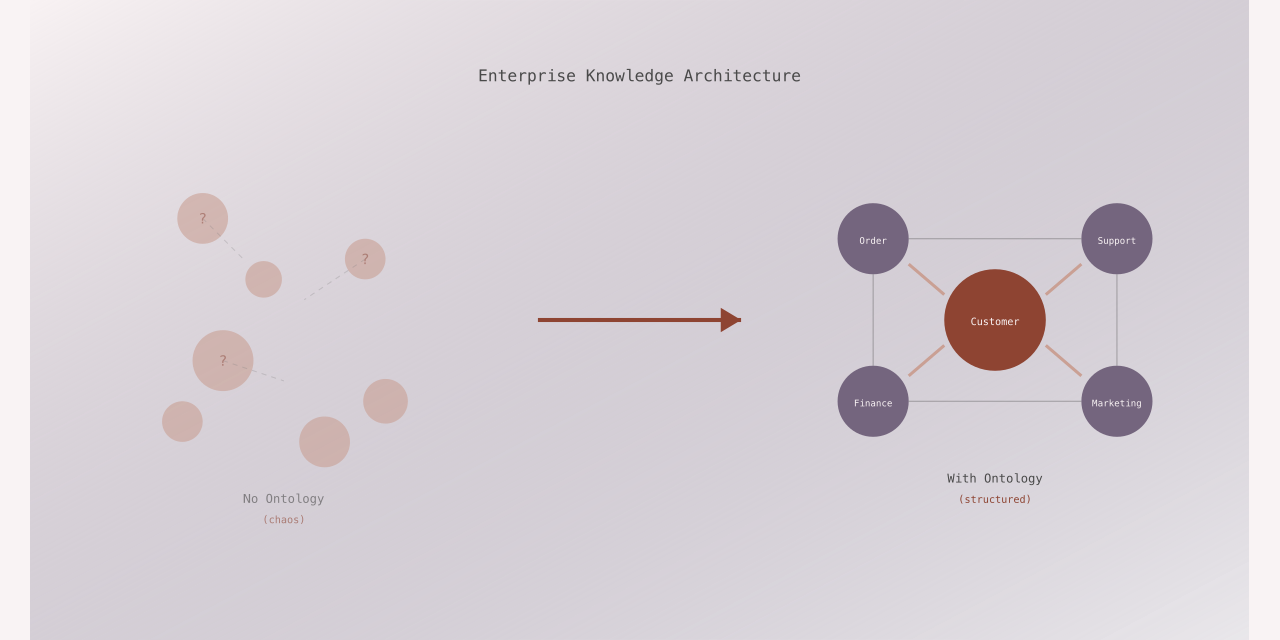

A well-formed enterprise ontology acts as a digital nervous system, providing the deterministic logic and contextual grounding required to move artificial intelligence from experimental pilots to core operational dependencies. While companies pour millions into the latest language models and ML platforms, they’re missing the foundational layer that determines whether AI succeeds or becomes your next expensive write-off.

Beyond Tags: Why Technical Monitoring Was Just the Beginning

In modern technology organizations, a familiar chaos often reigns. Teams operate with fragmented monitoring tools, leading to critical blind spots. Ownership of services is ambiguous, and dependencies between systems are opaque and undocumented. This disconnect creates a significant problem: it’s nearly impossible to trace the line from a low-level technical metric to a tangible business outcome. When an incident occurs, the result is slower detection, prolonged resolution, and an erosion of customer trust.

The solution is not another dashboard or monitoring tool. It’s something more foundational: an ontology, an explicit model of your services, platforms, resources, teams, and their relationships. It provides a shared language and a unified context for your entire technology estate.

This technical foundation was always just the tip of the iceberg. For enterprises serious about AI, the ontology must extend far beyond infrastructure monitoring to become the semantic backbone of the entire organization.

The Enterprise Ontology: Your Organization’s Digital Twin

An enterprise ontology is not a data model. Data models describe structure; ontologies describe meaning, relationships, and the decision-making processes that define how your business actually operates.

The Three Pillars of a Robust Enterprise Ontology

1. Contextual Data Modeling

Rather than merely organizing raw records, the ontology integrates information as interconnected objects and links, making complex real-world operations understandable for both human experts and automated systems. This layer captures:

- Technical metadata: Schema definitions, data lineage, system dependencies

- Business context: Policies, classifications, compliance requirements, usage restrictions

- Operational relationships: How entities interact, depend on each other, and evolve over time

Consider a “Customer” entity in an e-commerce platform. In a traditional data model, this is a table with columns. In an ontology, a Customer is a rich object that:

- Links to Orders (Purchase relationship)

- Connects to Support Tickets (Service relationship)

- Associates with Marketing Campaigns (Engagement relationship)

- Ties to Finance records (Billing relationship)

- Maps to Compliance requirements (Privacy relationship)

Each relationship carries semantic weight. When an AI agent needs to evaluate customer churn risk, it doesn’t just analyze purchase frequency; it understands the full context of service quality, engagement patterns, billing disputes, and privacy preferences.

2. Expressive Logic

This component encapsulates organizational rules, probabilities, and forecasting models. Logic can be applied “bottom-up” through machine learning or “top-down” via explicit business rules and semantic reasoning.

```yaml
# Example: Order Approval Logic in Ontology
OrderApproval:
  rules:
    - condition: "order.value < 10000 AND customer.creditScore > 700"
      action: AUTO_APPROVE
      authority: SYSTEM
    - condition: "order.value >= 10000 AND order.value < 100000"
      action: REQUIRE_MANAGER_APPROVAL
      authority: DEPARTMENT_MANAGER
    - condition: 'order.value >= 100000 OR customer.riskLevel == "HIGH"'
      action: REQUIRE_EXECUTIVE_APPROVAL
      authority: FINANCE_EXECUTIVE
  ai_enhancement:
    model: credit_risk_predictor
    override_threshold: 0.85
    human_in_loop: REQUIRED for flagged_cases
```

This isn’t just documentation: it’s executable logic that AI systems can reason over, audit trails can reference, and compliance teams can verify.
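To make “executable” concrete, here is a minimal Python sketch of how an engine might evaluate these approval rules. It rests on several assumptions. The conditions are compiled into predicates. Rules are checked strictest first, because the YAML states no precedence and a $50K order from a HIGH-risk customer matches two rules. Orders that match no rule fall back to manager review, since small orders from customers with a credit score of 700 or below are not covered. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Order:
    value: float


@dataclass(frozen=True)
class Customer:
    credit_score: int
    risk_level: str


@dataclass(frozen=True)
class ApprovalRule:
    name: str
    condition: Callable[[Order, Customer], bool]
    action: str
    authority: str


# Strictest rule first, so overlapping conditions resolve to the most
# restrictive outcome (assumed precedence; the YAML does not specify one).
RULES = [
    ApprovalRule("executive",
                 lambda o, c: o.value >= 100_000 or c.risk_level == "HIGH",
                 "REQUIRE_EXECUTIVE_APPROVAL", "FINANCE_EXECUTIVE"),
    ApprovalRule("manager",
                 lambda o, c: 10_000 <= o.value < 100_000,
                 "REQUIRE_MANAGER_APPROVAL", "DEPARTMENT_MANAGER"),
    ApprovalRule("auto",
                 lambda o, c: o.value < 10_000 and c.credit_score > 700,
                 "AUTO_APPROVE", "SYSTEM"),
]


def decide(order: Order, customer: Customer) -> tuple[str, str, str]:
    """Return (action, authority, rule name); the rule name feeds the audit trail."""
    for rule in RULES:
        if rule.condition(order, customer):
            return rule.action, rule.authority, rule.name
    # Hypothetical fallback: small orders from customers with a credit score
    # of 700 or below match no rule above, so route them to a person.
    return "REQUIRE_MANAGER_APPROVAL", "DEPARTMENT_MANAGER", "fallback"


print(decide(Order(5_000), Customer(750, "LOW")))  # ('AUTO_APPROVE', 'SYSTEM', 'auto')
```

Returning the rule name alongside the action means an audit record can cite exactly which policy fired.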

3. Operational Actions

The ontology defines the “verbs” of the enterprise: the actions that autonomous agents or human operators can take to manifest decisions in the physical or digital world. Capturing the outcomes of these actions creates a feedback loop, allowing the system to learn and evolve based on past results.

Actions in an ontology are not just API calls: they’re semantically meaningful operations with:

- Preconditions: What must be true before the action can execute

- Postconditions: Expected state changes after execution

- Permissions: Who or what can perform this action

- Audit requirements: What must be logged and monitored

- Rollback procedures: How to undo or compensate for failures
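The five properties listed above fit naturally into a single action definition with a runner that enforces them. The following is a minimal sketch under stated assumptions. The `ActionType` and `ActionRunner` names, the `ReserveInventory` example, and the snapshot-based rollback are all hypothetical. A production system would typically use compensating operations across the systems involved rather than restoring an in-memory copy.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable

State = dict  # hypothetical: an object's properties keyed by name


@dataclass(frozen=True)
class ActionType:
    name: str
    allowed_roles: frozenset                    # permissions
    precondition: Callable[[State], bool]       # must hold before execution
    apply: Callable[[State], None]              # the state change itself
    postcondition: Callable[[State], bool]      # expected state afterwards
    rollback: Callable[[State, State], None]    # (current, snapshot) -> undo


@dataclass
class ActionRunner:
    audit_log: list = field(default_factory=list)

    def _audit(self, action: ActionType, actor: str, outcome: str) -> None:
        # Audit requirement: who or what ran which action, when, and how it ended.
        self.audit_log.append({
            "action": action.name,
            "actor": actor,
            "outcome": outcome,
            "at": datetime.now(timezone.utc).isoformat(),
        })

    def run(self, action: ActionType, actor: str, role: str, state: State) -> bool:
        if role not in action.allowed_roles:
            self._audit(action, actor, "DENIED")
            return False
        if not action.precondition(state):
            self._audit(action, actor, "PRECONDITION_FAILED")
            return False
        snapshot = dict(state)
        try:
            action.apply(state)
            ok = action.postcondition(state)
        except Exception:
            ok = False
        if not ok:
            action.rollback(state, snapshot)
            self._audit(action, actor, "ROLLED_BACK")
            return False
        self._audit(action, actor, "SUCCEEDED")
        return True


def restore(state: State, snapshot: State) -> None:
    """Simplest rollback: put the pre-action snapshot back."""
    state.clear()
    state.update(snapshot)


QTY = 5  # hypothetical reservation size
reserve_inventory = ActionType(
    name="ReserveInventory",
    allowed_roles=frozenset({"OPS_AGENT", "WAREHOUSE_MANAGER"}),
    precondition=lambda s: s["on_hand"] >= QTY,
    apply=lambda s: s.update(on_hand=s["on_hand"] - QTY, reserved=s["reserved"] + QTY),
    postcondition=lambda s: s["on_hand"] >= 0 and s["reserved"] >= QTY,
    rollback=restore,
)
```

For example, `ActionRunner().run(reserve_inventory, "agent-7", "OPS_AGENT", {"on_hand": 12, "reserved": 0})` succeeds and logs the outcome. The same call with role `"SALES_ANALYST"` is denied and logged, and nothing changes.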

The Cross-Enterprise Ontology: Finance, HR, Sales, and Operations

A holistic enterprise ontology extends across all business functions, creating a unified semantic layer that enables AI to reason about the entire organization.

Finance: From Transactions to Strategic Insight

Financial ontologies model more than ledgers and accounts. They encode:

- Cost allocation structures: How expenses map to products, projects, and business units

- Revenue recognition rules: Complex logic for subscription, usage-based, and milestone billing

- Compliance frameworks: Regulatory requirements embedded as first-class constraints

- Risk relationships: How financial instruments, counterparties, and market conditions interact

When integrated with AI, finance ontologies enable comprehensive cost analysis. Consider this hypothetical example:

Query: "What's the full cost impact of shutting down the legacy payment service?"

AI Response with Ontology Context (hypothetical example):

- Direct infrastructure cost savings: $45K/month

- License savings: $12K/month

- BUT: Migration cost to new service: $280K one-time

- Risk: 3 revenue-critical integrations depend on legacy service

- Recommended action: Migrate critical integrations first (4-6 months)

- Break-even timeline: about 5 months after the legacy shutdown ($280K ÷ $57K/month in combined savings)

Without an ontology, this query requires manual analysis across finance, engineering, product, and sales systems. With an ontology, AI can traverse the semantic relationships to provide comprehensive, contextualized answers.
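Here is a minimal sketch of that traversal. It uses a hypothetical list of triples whose names and dollar figures mirror the illustrative example. The code follows `depends_on` links transitively to find revenue-critical consumers of the service. It also sums the costs the service `runs_on` or is `licensed_under` and computes a simple payback on those two direct savings lines only. Migration duration, double-running costs, and risk are left out. The relationship names are assumptions, not a standard vocabulary.

```python
from collections import deque

# Hypothetical ontology fragment as (subject, relationship, object) triples.
TRIPLES = [
    ("checkout-api", "depends_on", "legacy-payments"),
    ("invoicing", "depends_on", "legacy-payments"),
    ("partner-portal", "depends_on", "invoicing"),
    ("legacy-payments", "runs_on", "vm-cluster-a"),
    ("legacy-payments", "licensed_under", "payments-license"),
]
MONTHLY_COST = {"vm-cluster-a": 45_000, "payments-license": 12_000}
REVENUE_CRITICAL = {"checkout-api", "invoicing", "partner-portal"}


def dependents(service):
    """Every service that depends on `service`, directly or transitively."""
    found, queue = set(), deque([service])
    while queue:
        current = queue.popleft()
        for subject, relation, obj in TRIPLES:
            if relation == "depends_on" and obj == current and subject not in found:
                found.add(subject)
                queue.append(subject)
    return found


def shutdown_impact(service, migration_cost):
    # Savings = costs of resources the service runs on or is licensed under.
    monthly_savings = sum(
        MONTHLY_COST[obj]
        for subject, relation, obj in TRIPLES
        if subject == service and relation in ("runs_on", "licensed_under")
    )
    return {
        "monthly_savings": monthly_savings,
        "revenue_critical_dependents": sorted(dependents(service) & REVENUE_CRITICAL),
        # Simple payback on direct savings; ignores migration duration and overlap costs.
        "break_even_months": migration_cost / monthly_savings,
    }


impact = shutdown_impact("legacy-payments", 280_000)
print(round(impact["break_even_months"], 1))  # 4.9
```

Note that `partner-portal` is found only through its link to `invoicing`. This kind of indirect dependency is easy to miss in manual, system-by-system analysis.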

HR: People, Skills, and Organizational Dynamics

HR ontologies transform workforce management from record-keeping to strategic capability modeling:

- Skill taxonomies: Standardized definitions of competencies, proficiency levels, and skill relationships

- Role structures: How positions relate to teams, responsibilities, and career paths

- Performance frameworks: Linking individual contributions to team goals and business outcomes

- Compensation logic: Pay bands, equity structures, and incentive models

Consider talent acquisition enhanced by ontology:

```python
# Ontology-Powered Candidate Matching
from dataclasses import dataclass


@dataclass
class HiringRecommendation:
    skill_score: float
    cultural_fit: float
    growth_alignment: float
    compensation_fit: float


class CandidateEvaluator:
    def __init__(self, ontology, market_data):
        self.ontology = ontology          # semantic layer exposing the methods used below
        self.market_data = market_data    # external compensation benchmarks

    def evaluate_fit(self, candidate, position):
        # Direct skill match
        skill_overlap = self.ontology.match_skills(
            candidate.skills,
            position.required_skills,
        )

        # Team compatibility
        team_fit = self.ontology.analyze_team_dynamics(
            candidate.work_style,
            position.team.culture_profile,
        )

        # Career trajectory alignment
        growth_potential = self.ontology.project_career_path(
            candidate.experience,
            position.growth_opportunities,
        )

        # Compensation competitiveness
        market_position = self.ontology.compare_compensation(
            candidate.expectations,
            position.compensation_band,
            self.market_data,
        )

        return HiringRecommendation(
            skill_score=skill_overlap,
            cultural_fit=team_fit,
            growth_alignment=growth_potential,
            compensation_fit=market_position,
        )
```

This goes beyond keyword matching or basic ML classification. The ontology understands that “Python development” relates to “software engineering,” which connects to “backend systems,” which supports “platform infrastructure”: semantic relationships that simple models miss.
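To show why those relationships matter, here is a minimal sketch of taxonomy-aware skill matching. It assumes a hypothetical taxonomy in which each skill points to broader related skills. An exact match scores 1.0, and a skill reached through related skills scores `decay ** hops`. The taxonomy, the decay factor, and the scoring rule are illustrative assumptions. Something like this could back the `match_skills` call in the evaluator above, but the real interface is not specified.

```python
from collections import deque

# Hypothetical skill taxonomy: each skill points to broader, related skills.
BROADER = {
    "python development": ["software engineering"],
    "software engineering": ["backend systems"],
    "backend systems": ["platform infrastructure"],
    "platform infrastructure": [],
}


def hops(src, dst, graph=BROADER):
    """Breadth-first search: number of links from src up to dst, or None."""
    seen = {src}
    queue = deque([(src, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == dst:
            return dist
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None


def match_skills(candidate_skills, required_skills, decay=0.5):
    """Score in [0, 1]: an exact match counts 1.0, a related skill decay**hops."""
    if not required_skills:
        return 1.0
    total = 0.0
    for required in required_skills:
        best = 0.0
        for skill in candidate_skills:
            distance = hops(skill, required)
            if distance is not None:
                best = max(best, decay ** distance)
        total += best
    return total / len(required_skills)


print(match_skills(["python development"], ["backend systems"]))  # 0.25
```

A pure keyword match would score this candidate 0 for “backend systems”. The graph lets the score reflect that the candidate’s skill sits two steps below that requirement. Links point only toward broader skills, so a generalist is not credited with every specialism beneath them.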

Sales: From Leads to Revenue Orchestration

Sales ontologies unify customer journeys, revenue operations, and market intelligence:

- Customer lifecycle modeling: Stages, transitions, and success criteria

- Product catalogs: Features, configurations, pricing models, and competitive positioning

- Territory management: Geographic, vertical, and account relationships

- Pipeline analytics: Deal stages, conversion probabilities, and forecasting logic

The power of ontology in sales becomes clear when AI needs to optimize resource allocation. Consider this hypothetical scenario:

Scenario: "Which deals should we prioritize this quarter?"

Ontology-Informed AI Analysis (hypothetical example):

1. Deal $1.2M (Healthcare, Midwest)

- Close probability: 68%

- Requirements match: 94% (existing product)

- Resource needs: Standard implementation

- Strategic value: NEW vertical entry

- Recommendation: HIGH PRIORITY

2. Deal $3.5M (FinTech, East Coast)

- Close probability: 45%

- Requirements match: 62% (needs customization)

- Resource needs: 6 months engineering investment

- Strategic value: Existing customer expansion

- Recommendation: MEDIUM (resource constrained)

Context: Engineering capacity at 87%. New vertical (Healthcare) aligns with Q2 strategic initiative. Prioritize Deal 1 for faster close and strategic positioning.

The ontology connects sales data, product capabilities, engineering capacity, and strategic priorities into a coherent decision framework.
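Here is a minimal sketch of how those connected facts could drive the prioritization. The thresholds, the two-stage rule (capacity check first, then fit and strategy), and the `Deal` fields are illustrative assumptions, not a recommended policy. The deal figures reuse the hypothetical scenario above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Deal:
    name: str
    value: float
    close_probability: float
    requirements_match: float
    engineering_months: float    # custom work needed before delivery
    strategic_initiative: bool   # aligns with a current strategic priority

    @property
    def expected_value(self) -> float:
        return self.value * self.close_probability


def priority(deal: Deal, engineering_utilization: float, capacity_limit: float = 0.85) -> str:
    # Illustrative rules: capacity constraints first, then product fit and strategy.
    if deal.engineering_months > 0 and engineering_utilization >= capacity_limit:
        return "MEDIUM (resource constrained)"
    if deal.requirements_match >= 0.9 and deal.strategic_initiative:
        return "HIGH PRIORITY"
    return "MEDIUM"


RANK = {"HIGH PRIORITY": 0, "MEDIUM": 1, "MEDIUM (resource constrained)": 2}


def rank_deals(deals, engineering_utilization):
    """Order by priority label, then by expected value (largest first)."""
    labelled = [(priority(d, engineering_utilization), d) for d in deals]
    labelled.sort(key=lambda pair: (RANK[pair[0]], -pair[1].expected_value))
    return [(d.name, label) for label, d in labelled]


deals = [
    Deal("Deal 2", 3_500_000, 0.45, 0.62, 6, False),
    Deal("Deal 1", 1_200_000, 0.68, 0.94, 0, True),
]
print(rank_deals(deals, engineering_utilization=0.87))
# [('Deal 1', 'HIGH PRIORITY'), ('Deal 2', 'MEDIUM (resource constrained)')]
```

Deal 2 has the larger expected value ($1.575M against $816K). It still ranks second, and the reason is engineering capacity, which lives outside the sales system. That cross-domain link is exactly what the ontology supplies.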

Operations: The Integration Hub

Operations ontologies tie everything together, modeling:

- Supply chain relationships: Suppliers, materials, logistics, and inventory

- Manufacturing processes: Workflows, quality checkpoints, and resource requirements

- Service delivery: SLAs, incident management, and customer support workflows

- Asset management: Equipment lifecycles, maintenance schedules, and utilization tracking
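The relationships listed above turn impact analysis into a graph walk. Here is a minimal sketch, assuming hypothetical parts, stock levels, and orders whose identifiers echo the illustrative supply chain example in this section. It follows “requires” links from delayed parts to production lines, and from lines to the orders they serve. A line counts as blocked when a delayed part has no stock left, and at risk otherwise.

```python
# Hypothetical ontology links: production line -> parts it requires.
LINE_REQUIRES = {
    "Production line 3": {"PART-2847"},
    "Production line 5": {"PART-3012"},
    "Production line 7": {"PART-1100"},
}
ON_HAND = {"PART-2847": 0, "PART-3012": 40, "PART-1100": 500}  # units in stock
ORDER_LINE = {"ORD-1": "Production line 3",                  # order -> line building it
              "ORD-2": "Production line 5",
              "ORD-3": "Production line 7"}
ORDER_VALUE = {"ORD-1": 250_000, "ORD-2": 90_000, "ORD-3": 60_000}


def impact_of_delay(delayed_parts):
    delayed = set(delayed_parts)
    line_status = {}
    for line, parts in LINE_REQUIRES.items():
        hit = parts & delayed
        if hit:
            # No stock for any delayed part blocks the line; otherwise it is at risk.
            blocked = any(ON_HAND.get(p, 0) == 0 for p in hit)
            line_status[line] = "BLOCKED" if blocked else "AT_RISK"
    orders = sorted(o for o, line in ORDER_LINE.items() if line in line_status)
    return {
        "lines": line_status,
        "orders": orders,
        "revenue_at_risk": sum(ORDER_VALUE[o] for o in orders),
    }


print(impact_of_delay(["PART-2847", "PART-3012"])["revenue_at_risk"])  # 340000
```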

For organizations implementing AI-powered operations, the ontology becomes the control plane:

```yaml
# Hypothetical Example: Supply Chain Disruption Response
event: SUPPLIER_DELAY
supplier: "ComponentCo"
affected_parts: ["PART-2847", "PART-3012"]
delay_estimate: "3 weeks"

# Ontology-Driven AI Response
impact_analysis:
  - Production line 3: BLOCKED (requires PART-2847)
  - Production line 5: AT_RISK (low inventory PART-3012)
  - Customer orders: 14 orders delayed
  - Revenue impact: $2.1M at risk
mitigation_options:
  - alternate_supplier:
      name: "AlternativeSupply Inc"
      lead_time: "10 days"
      cost_premium: "+15%"
      quality_score: "Approved"
  - inventory_reallocation:
      source: "Warehouse B"
      available_units: 120
      transfer_time: "2 days"
      impact: "Covers 8 of 14 orders"
  - production_resequence:
      shift_to: "Production line 7"
      efficiency_loss: "12%"
      maintains_timeline: true
recommended_action:
  primary: "Inventory reallocation + alternate supplier"
  fallback: "Production resequence if supplier unavailable"
  approval_required: "VP Operations"
```

The Palantir Paradigm: Enterprise Ontology at Scale

Palantir Foundry’s success with enterprise ontology provides a concrete blueprint. Their approach transforms fragmented enterprise data into a semantic digital twin that enables both human operators and AI agents to make complex, context-aware decisions.

Key Architectural Patterns from Palantir

Semantic Layer Over Raw Data

Instead of forcing users to write SQL queries or understand database schemas, Palantir’s ontology presents business objects (Orders, Assets, Shipments) with meaningful properties and relationships. This abstraction:

- Makes data accessible to business users without technical expertise

- Enables natural language queries powered by LLMs that understand business semantics

- Ensures consistency: everyone references the same “Customer” concept

Governed Actions with Audit Trails

Every operation, whether triggered by a human or an AI agent, flows through ontology-defined Action Types. This means:

- All changes are validated against business rules and permissions

- Every action is traceable: who/what performed it, when, and why

- Rollback and remediation procedures are built into the action definitions

- Compliance becomes provable, not aspirational

Real-Time Operational Integration

The ontology isn’t a separate analytics layer: it is kept synchronized with operational systems at low latency. Changes in the real world (shipments move, inventory updates, financial transactions) are reflected in the ontology in near real time, so AI decisions rest on current rather than stale information.

AI as Functions Over Objects

Machine learning models are deployed as functions that operate on ontology objects. A fraud detection model doesn’t consume raw transaction tables; it evaluates “Payment” objects with rich context: customer history, merchant reputation, geographic patterns, and related accounts. This makes AI outputs:

- More accurate (more context leads to better predictions)

- More explainable (decisions reference business concepts, not table columns)

- More reusable (models work across similar object types)
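Here is a minimal sketch of a model written as a function over objects, using hypothetical `Payment`, `Customer`, and `Merchant` types. A hand-weighted score stands in for a trained model so the example stays self-contained. The point is the signature: the function receives a Payment already linked to its context, and it explains itself in business terms rather than column names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    home_country: str
    chargebacks_last_year: int


@dataclass(frozen=True)
class Merchant:
    reputation: float  # 0.0 (poor) to 1.0 (good); hypothetical property


@dataclass(frozen=True)
class Payment:
    country: str
    customer: Customer               # linked object, not a foreign key
    merchant: Merchant               # linked object
    flagged_related_accounts: int = 0


def fraud_risk(payment: Payment) -> tuple[float, list[str]]:
    """Hand-weighted stand-in for a trained model; returns (score, reasons)."""
    points, reasons = 0, []
    if payment.country != payment.customer.home_country:
        points += 3
        reasons.append("Payment country differs from customer's home country")
    if payment.merchant.reputation < 0.5:
        points += 3
        reasons.append("Merchant has a poor reputation score")
    if payment.customer.chargebacks_last_year > 2:
        points += 2
        reasons.append("Customer filed several chargebacks last year")
    if payment.flagged_related_accounts:
        points += 2
        reasons.append("Related accounts are flagged")
    return points / 10, reasons  # maximum of 10 points maps to 1.0
```

Because the inputs are typed objects, the same function works for any Payment regardless of which source system the transaction came from. This is the reusability point above.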

Quantifiable Benefits: Why Ontology Delivers ROI

The business case for enterprise ontology is compelling when you examine the measurable improvements:

Reduction in AI Hallucinations

Large language models without grounding in verified knowledge produce plausible but incorrect outputs. Knowledge graphs anchored in ontologies reduce this dramatically:

- Fact verification: Models query the ontology for verified information before generating responses

- Semantic consistency: Relationships ensure models don’t contradict themselves across answers

- Provenance tracking: Every AI-generated fact can be traced to authoritative sources

Research on knowledge graph-enhanced AI systems reports significant reductions in hallucination rates, between 40% and 60% in domain-specific applications, in studies of CoKGLM (Knowledge Graph-enhanced Language Models) and similar approaches that ground model outputs in verified semantic structures.
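The fact-verification, consistency, and provenance steps listed above can be sketched as a post-generation check. The sketch assumes the model's claims have already been extracted as (subject, predicate, object) triples; that extraction step is not shown. The facts, sources, and the choice of which predicates are single-valued are all hypothetical.

```python
# Hypothetical verified facts: (subject, predicate, object) -> authoritative source.
FACTS = {
    ("legacy-payments", "owned_by", "payments-team"): "service-catalog",
    ("checkout-api", "depends_on", "legacy-payments"): "dependency-scan",
}
# Predicates that allow only one value per subject (assumed ontology constraint).
SINGLE_VALUED = {"owned_by"}


def check_claims(claims):
    """Sort claims extracted from model output into grounded, contradicted, unsupported."""
    grounded, contradicted, unsupported = [], [], []
    for claim in claims:
        subject, predicate, _ = claim
        if claim in FACTS:
            grounded.append((claim, FACTS[claim]))  # keep provenance for citation
        elif predicate in SINGLE_VALUED and any(
            s == subject and p == predicate for s, p, _ in FACTS
        ):
            contradicted.append(claim)  # conflicts with a known single value
        else:
            unsupported.append(claim)   # not provably wrong, but not verified
    return grounded, contradicted, unsupported
```

Contradicted claims can be blocked outright. Unsupported ones can be flagged or sent back for regeneration, and grounded ones carry their source into the answer.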

Computational Efficiency Gains

GraphRAG (Retrieval-Augmented Generation with knowledge graphs) demonstrates:

- 80% reduction in token consumption compared to traditional RAG approaches

- Complexity reduction from O(n²) to O(k·n) for multi-document reasoning tasks

- Faster inference times due to targeted, relationship-aware retrieval

A 2025 paper in the ACL Anthology on GraphRAG implementations reports dramatic efficiency gains. In one regulatory compliance use case comparing requirements across 50+ documents, token usage fell from 1.8M to fewer than 2,500 tokens, roughly a 720-fold reduction, while accuracy was maintained through knowledge graph-based contradiction detection.
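The “targeted, relationship-aware retrieval” point can be illustrated with a minimal sketch. Instead of passing every document to the model, it fetches only the text chunks attached to entities within k hops of the entities named in the query. This is a simplification of the idea, not the GraphRAG pipeline from the cited paper. The graph, chunk names, and default k are assumptions.

```python
from collections import deque


def k_hop_entities(graph, seeds, k):
    """Entities within k hops of any seed in an undirected adjacency map."""
    seen = set(seeds)
    frontier = deque((seed, 0) for seed in seeds)
    while frontier:
        node, dist = frontier.popleft()
        if dist == k:
            continue
        for neighbor in graph.get(node, ()):
            if neighbor not in seen:
                seen.add(neighbor)
                frontier.append((neighbor, dist + 1))
    return seen


def retrieve(graph, chunks_by_entity, seeds, k=1):
    """Return only text chunks attached to the seeds' neighborhood, deduplicated."""
    chunks = []
    for entity in sorted(k_hop_entities(graph, seeds, k)):
        for chunk in chunks_by_entity.get(entity, []):
            if chunk not in chunks:
                chunks.append(chunk)
    return chunks
```

For a GDPR question with k=1, chunks about unrelated regulations never enter the prompt. Savings like this are where token reductions of the kind reported above come from.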

Operational Efficiency

Industry reports and case studies from organizations implementing ontology-driven architectures cite gains such as:

- 35-50% faster incident resolution due to clear ownership and dependency mapping

- 60% reduction in data integration effort when adding new systems

- 40% improvement in cross-functional collaboration through shared terminology

Strategic Agility

Perhaps most valuable: ontology-enabled enterprises gain strategic advantages including:

- Faster deployment of new AI capabilities through reusable semantic foundations

- More rapid adaptation to regulatory changes via explicit compliance logic

- Higher fidelity business scenario simulation using accurate operational models

The Implementation Roadmap: From Zero to Operational Ontology

Successful enterprise ontology adoption typically spans 12-24 months and follows a structured approach:

Phase 1: Readiness and Capability Mapping (1-2 months)

Assessment Activities:

- Data maturity audit across business units

- Technical infrastructure evaluation (APIs, integration patterns, data quality)

- Skills inventory (semantic modeling, knowledge engineering, domain expertise)

- Business gap analysis (where is decision-making slowest? Where does data integration fail?)

Deliverables:

- Readiness scorecard with maturity ratings per department

- Gap analysis matrix identifying critical deficiencies

- Stakeholder map showing champions and resistors

- Initial ROI projection

Phase 2: Strategic Planning and Governance (1-2 months)

Define the Foundation:

- Establish ontology governance board (cross-functional leadership)

- Select priority use cases (high value, achievable within 3-4 months)

- Choose technical standards (RDF/OWL vs. property graphs, tooling platforms)

- Design semantic modeling conventions and naming standards

Critical Success Factor: Start with business outcomes, not technical curiosity. Select use cases where:

- Current process pain is acute and measurable

- Data quality is reasonable (not perfect, but usable)

- Executive sponsorship is strong

- Success can be demonstrated within one quarter

Phase 3: Pilot Implementation (3-4 months)

Build and Validate:

- Model core entities for pilot domain (typically 20-40 object types)

- Integrate 3-5 key data sources

- Implement business logic and validation rules

- Deploy AI capability targeting the pilot use case

- Measure pilot against success criteria

Example Pilot: Finance Cost Allocation

Objective: Enable AI-assisted cost allocation across products and business units

Ontology Scope:

- Objects: CostCenter, Product, Team, Project, InfrastructureResource

- Relationships: allocates_to, depends_on, owned_by, contributes_to

- Logic: Cost allocation rules, chargeback policies, budget thresholds
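The pilot’s allocation logic might reduce to a small traversal over `allocates_to` and `owned_by` links. Here is a minimal sketch with hypothetical resources, usage shares, and budget thresholds. For brevity it rolls products up directly to cost centers, a simplification of the fuller Team and Project structure in the scope above. The “shares must sum to 1” check is an assumed chargeback rule.

```python
from collections import defaultdict
from math import isclose

# Hypothetical pilot data; relationship names follow the ontology scope above.
RESOURCE_COST = {"db-cluster": 30_000.0, "k8s-cluster": 50_000.0}   # monthly cost
ALLOCATES_TO = {                                                     # resource -> {product: share}
    "db-cluster": {"checkout": 0.5, "search": 0.5},
    "k8s-cluster": {"checkout": 0.6, "search": 0.2, "reporting": 0.2},
}
OWNED_BY = {"checkout": "CC-100", "search": "CC-200", "reporting": "CC-200"}  # product -> cost center


def allocate(budget_thresholds):
    product_cost = defaultdict(float)
    for resource, shares in ALLOCATES_TO.items():
        # Assumed chargeback rule: shares must account for the full resource cost.
        if not isclose(sum(shares.values()), 1.0):
            raise ValueError(f"{resource}: allocation shares must sum to 1")
        for product, share in shares.items():
            product_cost[product] += RESOURCE_COST[resource] * share
    center_cost = defaultdict(float)
    for product, cost in product_cost.items():
        center_cost[OWNED_BY[product]] += cost
    over_budget = sorted(
        cc for cc, cost in center_cost.items()
        if cost > budget_thresholds.get(cc, float("inf"))
    )
    return dict(product_cost), dict(center_cost), over_budget
```

With thresholds of $40K for CC-100 and $50K for CC-200, only CC-100 is flagged, because checkout carries $45K of allocated cost.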

Data Sources:

- ERP system (financial transactions)

- HRIS (team structures)

- Cloud provider APIs (infrastructure costs)

- Project management tools (initiatives and timelines)

AI Capability:

- Natural language cost queries

- Automated allocation recommendations

- Anomaly detection in spending patterns

- Budget variance explanations

Example Success Metrics (targets will vary by organization):

- Reduce manual allocation time significantly

- Increase allocation accuracy

- Enable more frequent cost reporting cycles

Phase 4: Scale and Enterprise Integration (6-12 months)

Expand the Foundation:

- Extend ontology to adjacent domains (HR, Sales, Operations)

- Standardize integration patterns and APIs

- Deploy ontology-backed AI capabilities across business units

- Train teams on semantic query tools and AI assistants

- Establish feedback loops for continuous ontology refinement

Change Management is Critical:

- Ontologies succeed when adopted, not just deployed

- Invest in training, documentation, and success stories

- Celebrate wins publicly; address failures transparently

- Build internal community of practice around ontology usage

Phase 5: Continuous Optimization (Ongoing)

Sustain and Evolve:

- Monitor ontology usage and quality metrics

- Retrain AI models as business logic evolves

- Expand to new use cases and domains

- Track ROI against original business case

- Adapt ontology as business strategy shifts

Overcoming Common Implementation Hurdles

Legacy System Integration

Challenge: Most enterprises have decades-old systems not designed for semantic modeling.

Solution: Model-first approach

- Define ontology based on business requirements, not existing schemas

- Build adapters that map legacy data to ontology objects

- Use automated knowledge extraction (NLP-powered) to identify concepts in unstructured data

- Incremental migration: new systems integrate natively; legacy systems use translation layers
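An adapter of this kind is usually a thin, explicit mapping from legacy columns to ontology properties, plus normalization. Here is a minimal sketch. The legacy column names, the YYYYMMDD date format, and the `Customer` property names are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date, datetime


@dataclass(frozen=True)
class Customer:
    customer_id: str
    name: str
    country: str
    customer_since: date


# Hypothetical legacy column names mapped to ontology property names.
LEGACY_CUSTOMER_MAP = {
    "CUST_NO": "customer_id",
    "CUST_NM": "name",
    "CTRY_CD": "country",
    "OPN_DT": "customer_since",
}


def customer_from_legacy(row: dict) -> Customer:
    """Translate one legacy record into an ontology Customer object."""
    props = {LEGACY_CUSTOMER_MAP[col]: val for col, val in row.items()
             if col in LEGACY_CUSTOMER_MAP}  # unmapped columns are ignored
    return Customer(
        customer_id=str(props["customer_id"]).strip(),
        name=props["name"].strip().title(),
        country=props["country"].strip().upper(),
        # Assumes the legacy system stores dates as YYYYMMDD strings.
        customer_since=datetime.strptime(props["customer_since"], "%Y%m%d").date(),
    )
```

The legacy schema stays untouched, and only this mapping needs to change if the legacy system does.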

Data Quality Issues

Challenge: “Our data isn’t clean enough for ontology.”

Reality Check: Data will never be perfect. Ontologies actually help surface and address quality issues.

Solution:

- Start with “good enough” data for pilot

- Use ontology constraints to define quality expectations

- Implement validation rules that flag inconsistencies

- Create feedback loops that improve data quality over time
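Constraints that flag problems, rather than reject records, let a pilot run on imperfect data while still measuring quality. Here is a minimal sketch with hypothetical Customer constraints. Its report (pass rate plus violation counts) is the kind of feedback that can go back to source-system owners.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Constraint:
    prop: str
    check: Callable[[Any], bool]
    message: str


# Hypothetical quality expectations for a Customer object.
CUSTOMER_CONSTRAINTS = [
    Constraint("email", lambda v: isinstance(v, str) and "@" in v,
               "missing or malformed"),
    Constraint("country", lambda v: isinstance(v, str) and len(v) == 2,
               "must be a two-letter code"),
    Constraint("credit_score",
               lambda v: v is None or (isinstance(v, int) and 300 <= v <= 850),
               "outside 300-850"),
]


def validate(record, constraints=CUSTOMER_CONSTRAINTS):
    """Flag violations instead of rejecting the record."""
    return [f"{c.prop}: {c.message}" for c in constraints
            if not c.check(record.get(c.prop))]


def quality_report(records):
    """Pass rate plus violation counts, to feed back to source-system owners."""
    counts = Counter(v for r in records for v in validate(r))
    passing = sum(1 for r in records if not validate(r))
    return {"pass_rate": passing / len(records) if records else 1.0,
            "violations": dict(counts)}
```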

Organizational Resistance

Challenge: “We’ve always done it this way” syndrome.

Solution:

- Lead with business value, not technology

- Identify early adopters and power users

- Show, don’t tell: demo concrete improvements

- Address concerns transparently: “This makes your job easier, not obsolete”

Scope Creep

Challenge: “While we’re at it, let’s model everything.”

Solution:

- Ruthless prioritization based on business value

- Time-box each phase with hard deadlines

- Deliver working capabilities incrementally

- Expand based on proven success, not theoretical value

Governance, Ethics, and Security: The Non-Negotiable Layer

An enterprise ontology that enables AI must embed governance directly into its architecture, not treat it as an afterthought.

Accountability and Transparency

Principle: Every AI-generated decision must be explainable and attributable.

Implementation:

- Action logging: Record who/what triggered each operation

- Decision provenance: Trace AI recommendations back through reasoning chains

- Model lineage: Document which models, data, and logic contributed to outputs

- Appeal mechanisms: Provide pathways for humans to challenge AI decisions
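The practices listed above can be combined in a small record builder. Each rule, model call, or contextual factor becomes a numbered reasoning step. Finalizing the record decides whether human review is needed and attaches a SHA-256 digest of the canonical JSON. This is a minimal sketch with hypothetical names. The 0.85 review threshold echoes the `override_threshold` in the approval example earlier. A bare hash only detects edits when the digest is stored somewhere the editor cannot rewrite; a production log would sign records or write them to append-only storage.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class DecisionRecord:
    decision_id: str
    decision_type: str
    subject: str
    reasoning_chain: list = field(default_factory=list)
    outcome: Optional[str] = None

    def add_step(self, kind: str, **details) -> None:
        # Decision provenance: each rule, model, or factor becomes a numbered step.
        self.reasoning_chain.append(
            {"step": len(self.reasoning_chain) + 1, "kind": kind, **details})

    def finalize(self, outcome: str, confidence: float,
                 review_threshold: float = 0.85) -> dict:
        self.outcome = outcome
        body = asdict(self)
        body["human_review_required"] = confidence < review_threshold
        body["verification_hash"] = _digest(body)
        return body


def _digest(body: dict) -> str:
    canonical = json.dumps(body, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def verify(body: dict) -> bool:
    """True if the record still matches the digest computed when it was finalized."""
    unsigned = {k: v for k, v in body.items() if k != "verification_hash"}
    return _digest(unsigned) == body["verification_hash"]
```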

```yaml
# Hypothetical Example: AI Decision Audit Record
decision_id: "DEC-2026-00847392"
timestamp: "2026-01-01T14:32:07Z"
decision_type: "CREDIT_APPROVAL"
subject: "Customer-47382"
outcome: "DENIED"
reasoning_chain:
  - ontology_rule: "CreditPolicy.high_risk_threshold"
    evaluation: "customer.debt_ratio > 0.65"
    result: TRIGGERED
  - ai_model: "credit_risk_v3.2"
    inputs:
      credit_score: 620
      payment_history: [late, late, ontime, late]
      income_stability: "variable"
    prediction:
      default_probability: 0.73
      confidence: 0.89
  - contextual_factors:
      recent_job_change: true        # risk factor
      existing_customer: false       # no history
      economic_conditions: "stable"
  - approval_authority: "AUTOMATED"  # within policy limits
explainability:
  primary_reason: "Debt-to-income ratio exceeds policy threshold"
  contributing_factors:
    - "High predicted default risk (73%)"
    - "Recent late payments in credit history"
  appeal_process: "Contact credit review team"
  appeal_deadline: "14 days"
human_review:
  required: false                    # confidence > 0.85, within policy
  override_authority: "CREDIT_MANAGER"
```

Compliance by Design

Principle: Regulatory requirements are first-class constraints in the ontology, not external checks.

Implementation:

- Encode regulations as ontology rules

- Validate all actions against compliance constraints before execution

- Generate audit trails automatically as operations occur

- Support regulatory reporting through semantic queries
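Encoding a regulation as ontology rules can start as a table keyed by object type. Here is a minimal sketch of how per-type rules could drive delete, anonymize, or retain decisions for an erasure request. The object types and their dispositions are hypothetical. Real dispositions and retention periods depend on jurisdiction and legal review. Types without a rule are routed to manual review rather than silently handled.

```python
from enum import Enum


class Disposition(Enum):
    DELETE = "delete"
    ANONYMIZE = "anonymize"
    RETAIN = "retain_for_legal"


# Hypothetical per-type rules; real dispositions and retention periods
# depend on jurisdiction and legal review.
ERASURE_RULES = {
    "Customer": Disposition.DELETE,
    "MarketingConsent": Disposition.DELETE,
    "SupportTicket": Disposition.ANONYMIZE,
    "Order": Disposition.ANONYMIZE,
    "Invoice": Disposition.RETAIN,
}


def plan_erasure(entities):
    """Group (object_type, object_id) pairs by disposition.

    Types without a rule are never silently deleted or kept; they are
    returned separately for manual review.
    """
    plan = {d: [] for d in Disposition}
    manual_review = []
    for object_type, object_id in entities:
        disposition = ERASURE_RULES.get(object_type)
        if disposition is None:
            manual_review.append((object_type, object_id))
        else:
            plan[disposition].append(object_id)
    return plan, manual_review
```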

For example, GDPR’s “right to be forgotten” becomes:

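```python
from dataclasses import dataclass
from datetime import datetime, timezone
import uuid


@dataclass
class ComplianceReport:
    request_id: str
    entities_deleted: list
    entities_anonymized: list
    retention_exceptions: list
    completion_timestamp: datetime
    verification_hash: str


class DataDeletionHandler:
    def __init__(self, ontology):
        # Semantic layer exposing find_related() and apply_compliance_rules()
        self.ontology = ontology

    def process_deletion_request(self, customer_id, request_id=None):
        # Ontology understands what "customer data" means across systems
        affected_entities = self.ontology.find_related(
            entity_type="Customer",
            entity_id=customer_id,
            relationship_types=["owns", "created", "associated_with"],
        )

        # Compliance rules dictate what must be deleted vs. anonymized
        deletion_plan = self.ontology.apply_compliance_rules(
            affected_entities,
            regulation="GDPR",
            operation="RIGHT_TO_BE_FORGOTTEN",
        )

        # Execute with audit trail
        results = self.execute_deletion(deletion_plan)

        return ComplianceReport(
            # A separate request ID avoids reusing the customer's identifier
            request_id=request_id or str(uuid.uuid4()),
            entities_deleted=results.deleted,
            entities_anonymized=results.anonymized,
            retention_exceptions=results.retained_for_legal,
            completion_timestamp=datetime.now(timezone.utc),
            verification_hash=results.audit_hash,
        )

    def execute_deletion(self, deletion_plan):
        """Apply the plan in each source system and return the audit results."""
        raise NotImplementedError("system-specific")
```

Security and Privacy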

Principle: Data access control is semantic, not just role-based.

Implementation:

- Permissions-aware knowledge graphs

- Attribute-based access control (ABAC) using ontology properties

- Data masking based on user context

- Privacy-preserving AI (models see only what they’re authorized to see)
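Semantic, attribute-based filtering can be sketched in a few lines. In the sketch below, the policy is a Python rendering of the SALES_ANALYST example in this section; the dictionary structure and function name are assumptions. Access is denied by default, the listed properties act as an allowlist, and rows are scoped by matching a user attribute (such as territory) against the same property on each record.

```python
# Policy mirroring the SALES_ANALYST example in this section (structure assumed).
POLICIES = {
    "SALES_ANALYST": {
        "Customer": {"properties": {"name", "industry", "deal_stage", "revenue"},
                     "filter_by": "assigned_territory"},
        "Deal": {"properties": {"value", "stage", "products", "timeline"},
                 "filter_by": "sales_region"},
    },
}


def authorized_view(role, user_attributes, object_type, records):
    """Return only the rows and properties the role may see (deny by default)."""
    rules = POLICIES.get(role, {}).get(object_type)
    if rules is None:
        raise PermissionError(f"{role} may not access {object_type}")
    scope_attr = rules["filter_by"]
    user_scope = user_attributes.get(scope_attr)
    if user_scope is None:
        return []  # no territory or region assigned: nothing is in scope
    allowed = rules["properties"]
    return [
        {k: v for k, v in record.items() if k in allowed}
        for record in records
        if record.get(scope_attr) == user_scope
    ]
```

Because properties act as an allowlist, sensitive fields such as `ssn` are dropped even without an explicit exclude list. The exclude list in the policy then serves as documentation and a safeguard.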

```yaml
# Semantic Access Control Policy
AccessPolicy:
  subject: "SALES_ANALYST"
  can_access:
    - object_type: "Customer"
      properties: [name, industry, deal_stage, revenue]
      restrictions:
        - exclude: [ssn, credit_card, personal_health]
        - filter_by: "assigned_territory"
    - object_type: "Deal"
      properties: [value, stage, products, timeline]
      restrictions:
        - exclude: [internal_cost, margin_analysis]
        - filter_by: "sales_region"
  cannot_access:
    - object_type: "Employee"
      reason: "HR data restricted to HR department"
    - object_type: "FinancialForecast"
      reason: "Executive-level information"
```

Where to Start: The Pragmatic First Steps

If you’re convinced but don’t know where to begin:

Step 1: Identify Your AI Pain Point

Where is AI failing or underdelivering today? Common answers:

- Inconsistent outputs from similar queries

- Poor adoption due to lack of trust

- High cost (token consumption, manual validation)

- Slow time-to-deployment for new capabilities

Step 2: Map the Required Context

What information would make AI more effective for that use case? This becomes your initial ontology scope:

- What business entities are involved?

- What relationships matter?

- What rules and constraints apply?

- What data sources must be integrated?

Step 3: Build Minimal Viable Ontology

Don’t try to model everything. Focus on:

- 10-20 core entity types

- Key relationships between them

- Critical business rules

- Integration with 2-3 essential data sources
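A minimal viable ontology can begin as a few lines of schema-as-code. Here is a sketch under stated assumptions: the `Ontology` and `LinkType` names and the in-memory storage are hypothetical. It shows the two checks that matter most early on: every relationship must connect declared entity types, and every link instance must respect its declared direction.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinkType:
    name: str
    source: str   # object type the link starts from
    target: str   # object type the link points to


class Ontology:
    """Tiny in-memory ontology: typed objects plus schema-checked links."""

    def __init__(self, object_types, link_types):
        self.object_types = set(object_types)
        self.link_types = {lt.name: lt for lt in link_types}
        for lt in link_types:
            undefined = {lt.source, lt.target} - self.object_types
            if undefined:
                raise ValueError(f"{lt.name} references undefined types {sorted(undefined)}")
        self.objects = {}   # object id -> object type
        self.links = []     # (link name, source id, target id)

    def add_object(self, object_id, object_type):
        if object_type not in self.object_types:
            raise ValueError(f"unknown object type {object_type}")
        self.objects[object_id] = object_type

    def link(self, name, source_id, target_id):
        lt = self.link_types[name]  # KeyError for undeclared relationships
        if (self.objects.get(source_id), self.objects.get(target_id)) != (lt.source, lt.target):
            raise ValueError(f"{name} must connect {lt.source} -> {lt.target}")
        self.links.append((name, source_id, target_id))

    def neighbors(self, object_id, name):
        return [t for n, s, t in self.links if n == name and s == object_id]


ont = Ontology(
    ["Customer", "Order", "SupportTicket"],
    [LinkType("placed", "Customer", "Order"), LinkType("raised", "Customer", "SupportTicket")],
)
ont.add_object("C-1", "Customer")
ont.add_object("O-9", "Order")
ont.link("placed", "C-1", "O-9")
print(ont.neighbors("C-1", "placed"))  # ['O-9']
```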

Step 4: Deploy One AI Capability

Prove the value:

- Natural language query tool

- Automated recommendation system

- Decision support assistant

- Anomaly detection with context

Step 5: Measure and Iterate

Track concrete improvements:

- Accuracy gains

- Time savings

- User adoption rates

- Cost reductions

Use success to build momentum for broader adoption.

The Fulcrum for Enterprise AI Success

If AI is a lever that amplifies organizational capability, the ontology is the fulcrum that determines whether that lever moves mountains or simply tilts at windmills.

Organizations that invest in well-formed enterprise ontologies are building sustainable competitive advantages. They’re not just deploying AI: they’re creating intelligent systems that:

- Understand the full context of business operations

- Make decisions that align with organizational policies and values

- Explain their reasoning in terms business users understand

- Adapt as the business evolves

- Operate under governance frameworks that ensure trust and compliance

The alternative? AI initiatives that deliver impressive demos but fail in production. Models that produce outputs nobody trusts. Integrations that break with every schema change. Governance frameworks that audit yesterday’s decisions while today’s AI runs unchecked.

Your lack of an ontology won’t just slow your AI adoption. It will make AI adoption dangerous, a boondoggle that consumes resources while delivering fragmented, unreliable, and ultimately unusable capabilities.

The enterprises that recognize this aren’t waiting for perfect conditions. They’re starting now, building pragmatically, learning continuously, and establishing the semantic foundations that will define competitive advantage in an AI-driven future.

The question isn’t whether you need an ontology for enterprise AI. The question is whether you’ll build it before or after your first multi-million dollar AI failure.

Getting Started: Practical Resources

Standards and Frameworks:

- W3C RDF/OWL for semantic modeling

- Property graph databases (Neo4j, Amazon Neptune) for operational ontologies

- Knowledge graph tooling (Palantir Foundry, eccenca Corporate Memory, Graphwise)

Learning Resources:

- “Knowledge Graphs and Semantic Technologies as Pillars of Enterprise AI” (eccenca, 2024)

- “Trustworthy Enterprise AI: A White Paper on Ontologies and Knowledge Graphs” (Cyberhill Partners, 2024)

- Palantir Foundry documentation on ontology modeling

- GraphRAG research papers on efficiency improvements

First Steps:

- Audit your current AI initiatives: where are they struggling?

- Identify one high-value use case where context is the bottleneck

- Assemble a cross-functional team (domain experts, data engineers, AI practitioners)

- Model the minimum viable ontology for that use case

- Build, test, measure, iterate

The future of enterprise AI is semantic, governed, and explainable. The foundation is ontology. The time to start is now.

If AI is a lever to amplify your organization’s capabilities, ontology is the fulcrum that determines whether you move mountains or just make noise. Choose wisely.

References and Further Reading

- Palantir Technologies: “Palantir Ontology Overview” - https://www.palantir.com/platforms/ontology/

- Cognizant: “The Power of Ontology in Palantir Foundry” (2024) - https://www.cognizant.com/us/en/the-power-of-ontology-in-palantir-foundry

- eccenca: “Knowledge Graphs and Semantic Technologies as Pillars of Enterprise AI” (2024) - https://eccenca.com/blog/article/knowledge-graphs-and-semantic-technologies-as-pillars-of-enterprise-ai

- Cyberhill Partners: “Trustworthy Enterprise AI: A White Paper on Ontologies and Knowledge Graphs” (2024) - https://cyberhillpartners.com/enterprise-ai-ontologies-knowledge-graphs/

- Graphwise: “Strategic Semantic Layer for Data and AI Success” (2024) - https://graphwise.ai/blog/graphwise-unlocking-enterprise-potential-the-strategic-power-of-a-semantic-layer/

- Enterprise Knowledge: “Graph Analytics in the Semantic Layer: Architectural Framework for Knowledge Intelligence” (2024) - https://enterprise-knowledge.com/graph-analytics-in-the-semantic-layer-architectural-framework-for-knowledge-intelligence/

- TigerGraph: “Reducing AI Hallucinations: Why LLMs Need Knowledge Graphs for Accuracy” (2024) - https://www.tigergraph.com/blog/reducing-ai-hallucinations-why-llms-need-knowledge-graphs-for-accuracy/

- ACL Anthology: “GraphRAG: Leveraging Graph-Based Efficiency to Minimize Hallucinations” (2025) - https://aclanthology.org/2025.genaik-1.6.pdf

- Semantic Arts: “Best Practices and Schools of Ontology Design” (2024) - https://www.semanticarts.com/the-data-centric-revolution-best-practices-and-schools-of-ontology-design/

- LEADing Practice: “Enterprise Ontology Engineering Standards” (2024) - https://www.leadingpractice.com/enterprise-standards/enterprise-engineering/enterprise-ontology/